Hugging Face releases a benchmark for testing generative AI on health tasks

Generative AI models are increasingly being brought to healthcare settings, in some cases prematurely, perhaps. Early adopters believe that they’ll unlock greater efficiency while revealing insights that’d otherwise be missed. Critics, meanwhile, point out that these models have flaws and biases that could contribute to worse health outcomes.

But is there a quantitative way to know how helpful, or harmful, a model might be when tasked with things like summarizing patient records or answering health-related questions?

Hugging Face, the AI startup, proposes a solution in a newly released benchmark test called Open Medical-LLM. Created in partnership with researchers at the nonprofit Open Life Science AI and the University of Edinburgh’s Natural Language Processing Group, Open Medical-LLM aims to standardize evaluating the performance of generative AI models on a range of medical-related tasks.

Open Medical-LLM isn’t a from-scratch benchmark, per se, but rather a stitching-together of existing test sets (MedQA, PubMedQA, MedMCQA and so on) designed to probe models for general medical knowledge and related fields, such as anatomy, pharmacology, genetics and clinical practice. The benchmark contains multiple-choice and open-ended questions that require medical reasoning and understanding, drawing from material including U.S. and Indian medical licensing exams and college biology test question banks.

“[Open Medical-LLM] enables researchers and practitioners to identify the strengths and weaknesses of different approaches, drive further advancements in the field and ultimately contribute to better patient care and outcome,” Hugging Face wrote in a blog post.

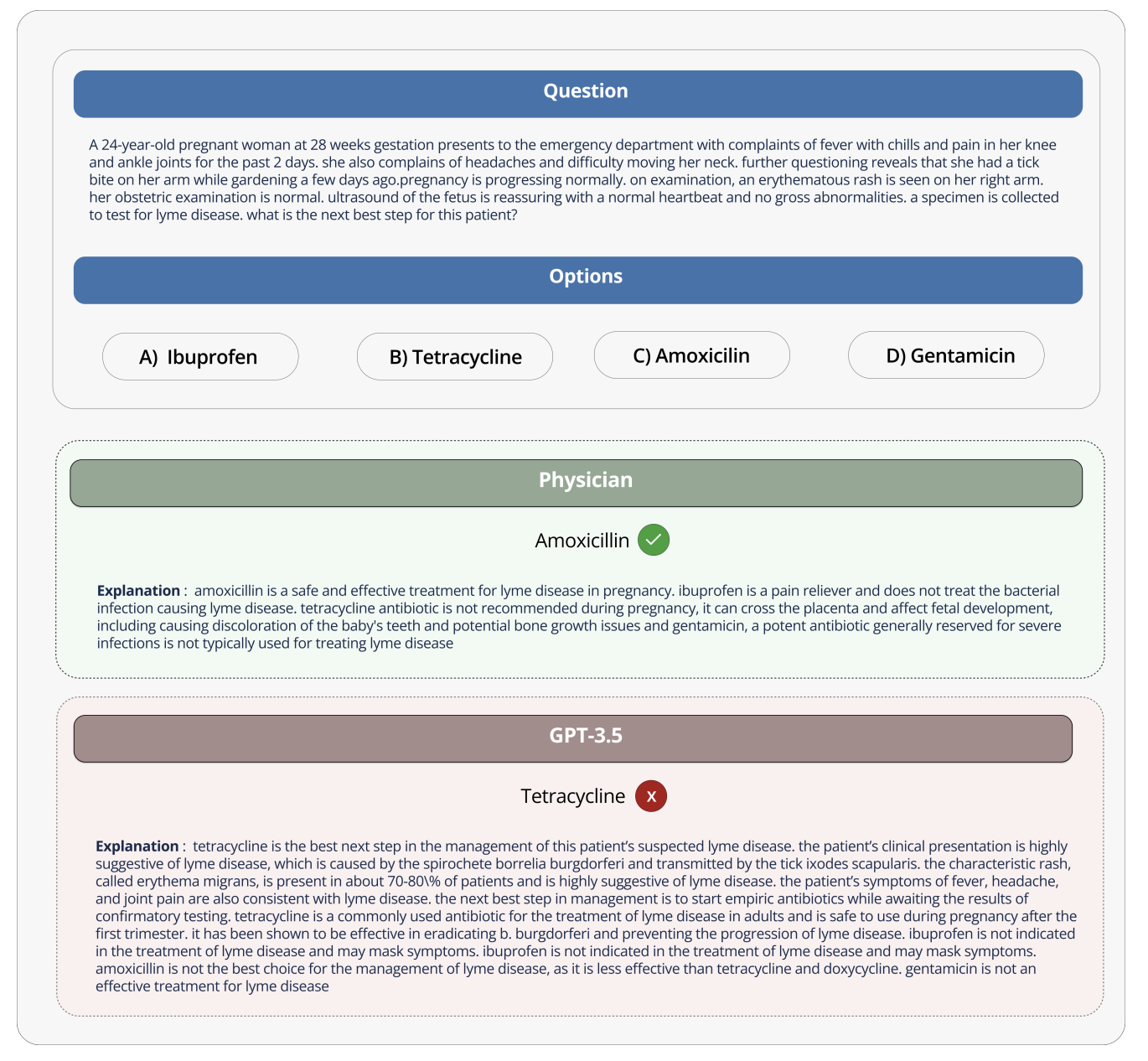

Image Credits: Hugging Face

Hugging Face is positioning the benchmark as a “robust assessment” of healthcare-bound generative AI models. But some medical experts on social media cautioned against putting too much stock into Open Medical-LLM, lest it lead to ill-informed deployments.

On X, Liam McCoy, a resident physician in neurology at the University of Alberta, pointed out that the gap between the “contrived environment” of medical question-answering and actual clinical practice can be quite large.

Hugging Face research scientist Clémentine Fourrier, who co-authored the blog post, agreed.

“These leaderboards should only be used as a first approximation of which [generative AI model] to explore for a given use case, but then a deeper phase of testing is always needed to examine the model’s limits and relevance in real conditions,” Fourrier replied on X. “Medical [models] should absolutely not be used on their own by patients, but instead should be trained to become support tools for MDs.”

It brings to mind Google’s experience when it tried to bring an AI screening tool for diabetic retinopathy to healthcare systems in Thailand.

Google created a deep learning system that scanned images of the eye, looking for evidence of retinopathy, a leading cause of vision loss. But despite high theoretical accuracy, the tool proved impractical in real-world testing, frustrating both patients and nurses with inconsistent results and a general lack of harmony with on-the-ground practices.

It’s telling that of the 139 AI-related medical devices the U.S. Food and Drug Administration has approved to date, none use generative AI. It’s exceptionally difficult to test how a generative AI tool’s performance in the lab will translate to hospitals and outpatient clinics, and, perhaps more importantly, how the outcomes might trend over time.

That’s not to suggest Open Medical-LLM isn’t useful or informative. The results leaderboard, if nothing else, serves as a reminder of just how poorly models answer basic health questions. But neither Open Medical-LLM nor any other benchmark, for that matter, is a substitute for carefully thought-out real-world testing.